Beyond Code Generation

Rethinking Developer Productivity in the Age of AI

Justin Cranshaw is an HCI researcher and co-founder of Maestro AI. He strives to create a more meaningful and productive future of work through software. Connect with him on Twitter or LinkedIn.

We stand at the cusp of a seismic shift in software development, and it's not just about AI writing code. Beyond the impressive feats of GitHub Copilot, there's a more encompassing transformation looming. Software development isn't a solitary act of coding; it's a symphony of collaboration, communication, and coordination. Embracing AI's true potential means recognizing its role not just in code creation but in every nuanced facet of software development.

The overemphasis on code generation is understandable. For many, programming is synonymous with software development. But the reality is far more complex. In fact, professional developers only spend a fraction of their day actually writing code. Activities like collaborating, debugging, planning, code reviewing, and communicating play equally important roles in building quality software.

To truly enhance developer team productivity, we must refocus our attention away from the solo AI coding agent and examine how an AI software developer might operate as part of a collaborative team. In this article, I turn to some of the research on how developers spend their time to dispel myths around productivity and explore how AI can optimize software development beyond just code generation. The possibilities are expansive, from automated testing to intelligent knowledge-sharing tools. However, realizing these gains requires fresh thinking on where and how AI can make the greatest impact.

A Day in the Life of a Dev: A Lot More Than Just Coding

Alex arrives at the office at 9 a.m., coffee in hand. She is a professional software developer at a startup, so one might imagine she’ll spend her day laser-focused, churning out lines of flawless code. The reality, however, is quite different: she spends only a small part of her day writing new code.

Alex starts her morning by catching up on Slack and email and chatting with teammates to coordinate plans for the day. Next comes triaging GitHub issues and code reviews — scanning through bugs, feature requests, and freshly submitted code from colleagues. Before diving into coding, Alex needs to understand the context and objectives for today’s tasks. This involves reviewing design documents and product specs and navigating through code to comprehend how the parts she’s working on fit into the whole system. In fact, Alex finds she spends most of her coding time just working to understand existing code.

When Alex finally starts coding in the afternoon, her work is punctuated by distractions. Slack notifications pull her attention, meetings take her away from her editor, and web searches detour her from the task at hand. She occasionally needs to debug thorny issues, which slows progress to a crawl. After powering through a few hours of productive coding, Alex takes a breather to chat with coworkers and mentally recharge. As the day wraps up, Alex tidies loose ends, sending follow-up emails and documenting the day’s progress.

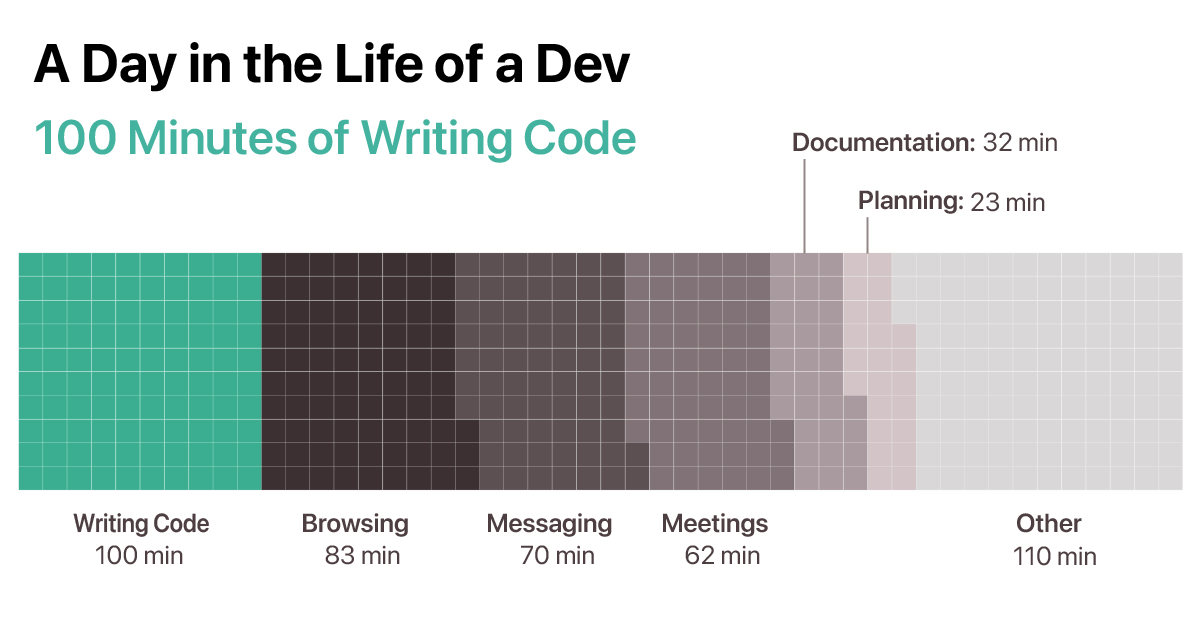

It turns out that Alex's experience is more or less the norm, according to research on software developers' work patterns. For example, Meyer et al. found that developers spend only about 21% of their time writing code, with the rest taken up by activities such as email/messaging (14.5%), documentation (6.6%), meetings (12.9%), planning (4.8%), browsing (17.3%), and other activities (22.9%). Digging deeper into coding time, Minelli et al. found that for 70% of the time developers are actively working inside a code editor, they are reading code, not writing it. Writing code accounted for just 5% of a developer's day in their study.
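To make those shares concrete, here is a minimal sketch that converts the Meyer et al. percentages quoted above into minutes of a workday. The eight-hour day is an assumption made for illustration, not a figure from the study, and the function name is hypothetical.

```python
# Shares of the workday reported by Meyer et al., as quoted above (percent).
ACTIVITY_SHARE = {
    "coding": 21.0,
    "email/messaging": 14.5,
    "documentation": 6.6,
    "meetings": 12.9,
    "planning": 4.8,
    "browsing": 17.3,
    "other": 22.9,
}

# Assumption: an 8-hour workday. This number does not come from the study.
WORKDAY_MINUTES = 8 * 60


def minutes_per_activity(shares, workday_minutes=WORKDAY_MINUTES):
    """Convert percentage shares into minutes of a workday."""
    return {name: workday_minutes * pct / 100 for name, pct in shares.items()}


if __name__ == "__main__":
    for name, minutes in minutes_per_activity(ACTIVITY_SHARE).items():
        print(f"{name:<16} {minutes:6.1f} min")
```

Under that assumption, the 21% coding share works out to roughly 101 minutes of an eight-hour day, which is less than the time attributed to email, messaging, and meetings combined.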

Developers also switch contexts very frequently. Studies show that workers switch tasks every 3-4 minutes on average (Mark et al., 2005; Mark et al., 2008; Murphy-Hill et al., 2019). This constant context switching induces high cognitive load and stress, and it takes workers an average of 23 minutes to fully regain their attention when resuming an interrupted task (Mark et al., 2015).

AI that focuses narrowly on automating coding overlooks this bigger picture. Tools that amplify uniquely human strengths across the entire development workflow offer potentially greater productivity gains. Building such tools will require a human-centered perspective on where automation can unburden developers and unlock more time for high-value creative work.

“Finding ways to improve productivity is also about finding ways to introduce more joy, and decrease frustration, in a developer’s day.” —Forsgren, et al., 2021

Developer Productivity: A Complex Equation

Measuring developer productivity is not as straightforward as simply counting lines of code produced. As a recent Hacker News thread and the linked article by Dan North highlight, exactly what makes a developer “productive” is a complex topic with many perspectives. While some focus on metrics like velocity and ticket closure rates, others emphasize softer skills like mentorship that enable organizational success.

As research has shown, productivity is a multifaceted concept that depends on many factors. Although many developer teams rely on quantitative metrics to measure productivity in practice, non-technical factors like job enthusiasm, peer support, and useful performance feedback are some of the strongest predictors of developers' self-rated productivity (Murphy-Hill et al., 2019).

Forsgren et al. propose a framework called SPACE that captures 5 key dimensions: Satisfaction, Performance, Activity, Communication/collaboration, and Efficiency/flow. Satisfaction relates to developer happiness and well-being. Performance refers to outcomes like quality and customer impact. Activity covers outputs like commits. Communication/collaboration looks at teamwork. Efficiency/flow examines minimizing delays. This framework highlights how productivity involves people, processes, and technologies across levels from individual to organization.

Sadowski et al. suggest considering productivity along 3 core dimensions: velocity, quality, and satisfaction. Velocity captures how fast work gets done, while quality examines how well it gets done. Satisfaction involves human factors like engagement and flow. They note these dimensions interact and can be in tension, so all need to be considered holistically.

Other researchers like Wagner and Deissenboeck emphasize the importance of measuring the value produced, not just quantity. Value depends on the software's purpose and is determined by functionality and quality. Ko argues productivity measurement may not even be necessary if managers focus on directly understanding developer challenges through close engagement.

Overall, these frameworks reveal that productivity depends on various interdependent factors. Metrics need to be custom-tailored to provide a complete picture aligned to specific goals. There is no single productivity metric that can universally quantify developer output and value generation. The complexity stems from both technical and human elements that must work together efficiently and effectively.

Beyond Code Generation: AI’s Untapped Potential for Software Teams

The narrow focus on AI code generation misses AI’s potential to transform team productivity more holistically. Returning to the SPACE framework from Forsgren et al. introduced above, we can imagine new ways AI can optimize key facets of collaborative software development beyond code generation.

Satisfaction: Automated Knowledge Management. Developers are more satisfied when they know what's going on in their team and when they better understand how their work fits into the larger context. Ensuring that AI understands the context, relevance, and interrelations of vast project data is challenging, but knowledge graphs and search algorithms tailored to software development can help. By categorizing, tagging, and mapping relationships between different pieces of information, AI can provide developers with richer, context-aware insights.

Performance: Automated Testing. It’s widely accepted that increased test coverage and bug detection improve the overall performance of a system. However, manual testing is time-consuming and repetitive. AI can be leveraged to generate test cases, combine tests to maximize coverage, and evolve tests in response to code changes. Trained on past bugs and test suites, AI can recommend targeted tests that correspond to likely failure points. AI can also perform robust regression testing overnight, freeing up developer time.
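As one illustration of combining tests to maximize coverage, the sketch below uses a simple greedy heuristic: repeatedly pick the test that covers the most lines not yet covered. This is a classical approximation, not a learned model and not guaranteed to be optimal; the function name, test names, and coverage data are all hypothetical.

```python
def select_tests(coverage, budget):
    """Greedily pick up to `budget` tests, each time taking the test that
    covers the most lines not yet covered by earlier picks."""
    remaining = dict(coverage)
    covered = set()
    chosen = []
    while remaining and len(chosen) < budget:
        best = max(remaining, key=lambda name: len(remaining[name] - covered))
        gain = remaining[best] - covered
        if not gain:
            break  # no remaining test adds new coverage
        chosen.append(best)
        covered |= gain
        del remaining[best]
    return chosen, covered


# Hypothetical data: test name -> set of line numbers the test executes.
COVERAGE = {
    "test_login": {1, 2, 3, 4},
    "test_logout": {3, 4, 5},
    "test_signup": {1, 2, 6, 7, 8},
    "test_profile": {5, 9},
}

if __name__ == "__main__":
    chosen, covered = select_tests(COVERAGE, budget=2)
    print(chosen, len(covered))
```

With a budget of two tests, the heuristic picks `test_signup` and then `test_logout`, covering eight of the nine lines in this toy data. An AI-based tool could replace the raw line counts with learned estimates of where failures are likely.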

Activity: Augmented Code Reviews. Code reviews are an indispensable way to improve code quality while maintaining adherence to team-specific standards and overarching architectural decisions. However, pull requests often get stuck in review longer than necessary, dragging down the overall activity of the team. AI can be trained on a team's past code reviews and standards to provide tailored and relevant review suggestions. It can also triage code changes, separating trivial ones from complex ones that need human review. This optimizes expert time while upholding quality and custom team standards.
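The triage step can be pictured with a deliberately simple, rule-based stand-in. This is not an AI model: it only shows the shape of the decision (trivial versus needs human review) that a trained system would make with richer signals. The `Change` fields and the thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """Summary of a pull request; all fields are hypothetical."""
    files_touched: int
    lines_changed: int
    docs_only: bool = False


def triage(change, max_files=2, max_lines=20):
    """Label a change 'trivial' or 'needs-human-review' using size thresholds.

    The thresholds are illustrative defaults, not recommended values.
    """
    if change.docs_only:
        return "trivial"
    if change.files_touched <= max_files and change.lines_changed <= max_lines:
        return "trivial"
    return "needs-human-review"


if __name__ == "__main__":
    print(triage(Change(files_touched=1, lines_changed=5)))
    print(triage(Change(files_touched=8, lines_changed=400)))
```

A model trained on a team's review history would presumably replace these fixed thresholds, but the output contract could stay the same: a label that routes each change to the right level of scrutiny.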

Communication: Requirements Gathering. Eliciting comprehensive requirements relies heavily on team communication. AI can augment this process by digesting and analyzing documents or conversations to extract key user needs. Natural language processing allows an AI agent to translate user stories into actionable requirements definitions. Sentiment analysis can help surface user pain points and edge cases that might otherwise get overlooked. As requirements evolve, AI can also rapidly analyze dependencies and the impact on existing code.

Efficiency: Automated Project Management. Effective project management is key to efficient execution, but it is often overlooked. Project management involves many complex, interrelated tasks that can benefit from AI automation. Intelligent assistants can generate and update comprehensive project plans and documentation. AI can also track progress, identify roadblocks, and coordinate communication for seamless team workflows. By automating routine project management responsibilities, AI can reduce the costs of context switching and enable developers to focus their efforts on higher-value work.

Overall, AI has an expansive role to play in streamlining collaboration, communication, and coordination across the software development lifecycle. But human creativity, judgment and empathy remain irreplaceable. By maximizing synergies between human and artificial intelligence, developers and teams can thrive in an AI-driven world.

Rethinking Metrics for an AI-Driven World

As we transition into an era dominated by AI tools in software development, it's crucial to reassess the metrics by which we measure success. Traditional productivity metrics might not capture the full value and nuances of AI-driven development. To ensure that we harness the transformative power of AI without inadvertently stifling innovation or sacrificing quality, we need to reframe our metrics.

From Lines of Code to Value Delivered: In an AI-driven world, the number of lines of code becomes an even less relevant measure of productivity. With AI tools capable of generating vast amounts of code quickly, it's more crucial to focus on the actual value delivered to users. This means emphasizing functionality, user experience, and the tangible benefits a software solution provides. Rather than simply tracking output volume, we could take a blended approach combining user surveys, expert reviews, and performance data to assess improvements enabled by AI-generated code. Each method has limitations, but together they can provide a more balanced perspective on value delivered.

Human-AI Collaboration Index: As AI becomes a collaborative partner, it's essential to measure how effectively developers and AI tools work together. This could involve tracking the frequency of human-AI interactions, the success rate of these interactions, and the overall satisfaction of developers with AI-driven processes. Passively tracking AI acceptance rates provides limited insights without context. It may be better to directly assess collaboration effectiveness through developer satisfaction surveys and open-ended feedback. This qualitative data can surface insights beyond what passive metrics convey.

User Experience and Feedback: In the end, software is for users. Metrics that capture user satisfaction, the learning curve associated with AI-introduced features, and the overall user experience can offer a more holistic view of a project's success. No single data source can fully capture user perspectives. A multifaceted approach combining usage analytics, passive observation, surveys, and user testing could provide rich insights into learning curves, usability, and overall experience. Each method complements the others to assemble a more complete picture.

Innovation Quotient: With AI handling more rote tasks, developers should theoretically have more time for innovation. Metrics that measure innovative outputs—be it in terms of novel solutions, features, or approaches—can shed light on the true benefits of AI-driven development. Quantifying innovation is difficult and risks negative incentives. Instead of measurement, it may be better to focus on providing time for exploration and gauging developer perceptions of creative freedom. Secondary indicators like satisfaction, time spent experimenting, and expert reviews could provide alternative perspectives on innovative progress.

In essence, the integration of AI into software development calls for a more comprehensive and nuanced approach to measuring success. As AI continues to shape the development landscape, our metrics must evolve in tandem to ensure that we're capturing the true value and potential challenges of this brave new world. Only by redefining our success criteria can we fully realize the transformative potential of AI in software development.

Conclusion: A Human-Centric Future Augmented by AI

Transforming the productivity of developer teams requires looking beyond code generation to the diverse activities comprising real-world software development. While coding is important, activities like communicating, collaborating, and understanding code play equally critical roles. Thoughtfully applied, AI can automate repetitive tasks and optimize teamwork across all of these areas. This frees up developers' time for higher-level problem-solving, leading to more rapid delivery of quality software. However, achieving these gains requires a human-centric perspective on productivity. By both automating coding and facilitating teamwork, AI can truly revolutionize software development.

References

Meyer, A. N., Barton, L. E., Murphy, G. C., Zimmermann, T., & Fritz, T. (2017). The work life of developers: Activities, switches and perceived productivity. IEEE Transactions on Software Engineering, 43(12), 1178-1193.

Minelli, R., Mocci, A., & Lanza, M. (2015). I know what you did last summer: An investigation of how developers spend their time. In 2015 IEEE 23rd International Conference on Program Comprehension (pp. 25-35). IEEE.

Mark, G., Gonzalez, V. M., & Harris, J. (2005). No task left behind? Examining the nature of fragmented work. In Proceedings of the SIGCHI conference on Human factors in computing systems (pp. 321-330).

Mark, G., Gudith, D., & Klocke, U. (2008). The cost of interrupted work: more speed and stress. In Proceedings of the SIGCHI conference on Human Factors in Computing Systems (pp. 107-110).

Mark, G., Iqbal, S., Czerwinski, M., & Johns, P. (2015, February). Focused, aroused, but so distractible: Temporal perspectives on multitasking and communications. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing (pp. 903-916).

Forsgren, N., Storey, M., Maddila, C., Zimmermann, T., Houck, B., & Butler, J. (2021). The SPACE of developer productivity: There's more to it than you think. ACM Queue, 19(1), 20-48.

Sadowski, C., Storey, M. A., & Feldt, R. (2019). A software development productivity framework. In Rethinking Productivity in Software Engineering (pp. 39-48). Apress, Berkeley, CA.

Wagner, S., & Deissenboeck, F. (2019). An integrated view on software engineering productivity. In Rethinking Productivity in Software Engineering (pp. 29-38). Apress, Berkeley, CA.

Ko, A. J. (2019). Why we should not measure productivity. In Rethinking Productivity in Software Engineering (pp. 21-28). Apress, Berkeley, CA.

Murphy-Hill, E., Jaspan, C., Sadowski, C., Shepherd, D., Phillips, M., Winter, C., ... & Jorde, M. (2019). What predicts software developers’ productivity? IEEE Transactions on Software Engineering, 47(3), 582-594.
